The Project Gutenberg eBook of Physical significance of entropy or of the second law, by John Frederick Klein

Title: Physical significance of entropy or of the second law

Author: John Frederick Klein

Release Date: July 28, 2023 [eBook #71291]

Language: English

Credits: MWS, Laura and the Online Distributed Proofreading Team at https://www.pgdp.net (This file was produced from images generously made available by The Internet Archive/Canadian Libraries)

BY

Professor of Mechanical Engineering,

Lehigh University

NEW YORK

D. VAN NOSTRAND COMPANY

23 MURRAY AND 27 WARREN STREETS

1910

COPYRIGHT, 1910,

BY

JOSEPH FREDERICK KLEIN

THE SCIENTIFIC PRESS

ROBERT DRUMMOND AND COMPANY

BROOKLYN, N. Y.

In this little book the author has in the main sought to present the interpretation reached by BOLTZMANN and by PLANCK. The writer has drawn most heavily upon PLANCK, for he is at once the clearest expositor of BOLTZMANN and an original and important contributor. Now these two investigators reach the result that entropy of any physical state is the logarithm of the probability of the state, and this probability is identical with the number of "complexions" of the state. This number is the measure of the permutability of certain elements of the state and in this sense entropy is the "measure of the disorder of the motions of a system of mass points." To realize more fully the ultimate nature of entropy, the writer has, in the light of these definitions, interpreted some well-known and much-discussed thermodynamic occurrences and statements. A brief outline of the general procedure followed will be found on p. 3, while a fuller synopsis is of course given in the accompanying table of contents.

J. F. Klein.

Lehigh University, October, 1910.

[Pg iii]| PAGE | ||

| INTRODUCTION | ||

| Purpose, acknowledgments, the two methods of approach and outline of treatment | 1 | |

| PART I | ||

| THE DEFINITIONS, GENERAL PRELIMINARIES, DEVELOPMENT, CURRENT AND PRECISE STATEMENTS OF THE MATTERS CONSIDERED | ||

| SECTION A | ||

| (1) The "state" of a body and its "change of state" | 5 | |

| The two points of view; the microscopic and the macroscopic observer; the micro-state and macro-state or aggregate | 5 | |

| The selected and the rejected micro-states; the use of the hypothesis of "elementary chaos" | 7 | |

| PLANCK'S fuller description of what constitutes the state of a physical system | 10 | |

| (2) Further elucidation of the essential prerequisite, "elementary chaos." Sundry aspects of haphazard | 11 | |

| BOLTZMANN'S service to science in this field and his view of what constitute the necessary features of haphazard | 12 | |

| BURBURY'S simplification of haphazard necessary and his example of "elementary chaos" | 15 | |

| Haphazard as expressed by a system possessing an extraordinary number of degrees of freedom | 17 | |

| (3) Settled and unsettled states; distinction between final stage of "elementary chaos" and its preceding stages | 18 | |

| Each stage has sufficient haphazard; examples and characteristics of the settled and unsettled stages of "elementary chaos"; all micro-states not equally likely; the assumed state of "chaos" does not eliminate adequate haphazard; two anticipatory remarks | 19 | |

| SECTION B | ||

| CONCERNING THE APPLICATION OF THE CALCULUS OF PROBABILITIES | ||

| (1) The probability concept, its usefulness in the past, its present necessity, and its universality | 22 | |

| Popular objection to its use; Boltzmann's justification of this concept; its usefulness in the past and in other fields; some of its good points; the haphazard features necessary for its use | 23 | |

| (2) What is meant by probability of a state? Example | 27 | |

| SECTION C | ||

| (1) The existence, definition, measure, properties, relations and scope of irreversibility and reversibility | 29 | |

| Inference from experience; inference from the H-theorem or calculus of probabilities; definitions of irreversible and reversible processes; examples of each | 30 | |

| (2) Character of process decided by limiting states | 32 | |

| Nature's preference for a state; measure of this preference | 33 | |

| Entropy both the criterion and the measure of irreversibility | 33 | |

| (3) All the irreversible processes stand or fall together | 34 | |

| (4) Convenience of the fiction, the reversible processes | 35 | |

| Entropy the only universal measure of irreversibility. Outcome of the whole study of irreversibility | 36 | |

| SECTION D | ||

| (1) The gradual development of the idea that entropy depends on probability or number of complexions | 37 | |

| Why it is difficult to conceive of entropy. Origin and first definition due to CLAUSIUS; some formulas for it available from the start. Its statistical character early appreciated; lack of precise physical meaning; its dependence on probability; number of complexions a synonym for probability | 37 | |

| (2) PLANCK'S formula for the relation between entropy and the number of complexions | 40 | |

| Certain features of entropy | 41 | |

| SECTION E | ||

| Equivalents of change of entropy in more or less general physical terms or aspects | 41 | |

| Not surprising that its many forms should have been a reproach to the second law | 41 | |

| General principles for comparing these aspects. Various aspects of growth of entropy from the experiential and from the atomic point of view | 42 | |

| SECTION F | ||

| More precise and specific statements of the second law | 44 | |

| General arrangement and the principles for comparison | 44 | |

| Ten different statements of the law and comments thereon | 44 | |

| PART II | ||

| ANALYTICAL EXPRESSIONS FOR A FEW PRIMARY RELATIONS | ||

| Procedure followed | 48 | |

| SECTION A | ||

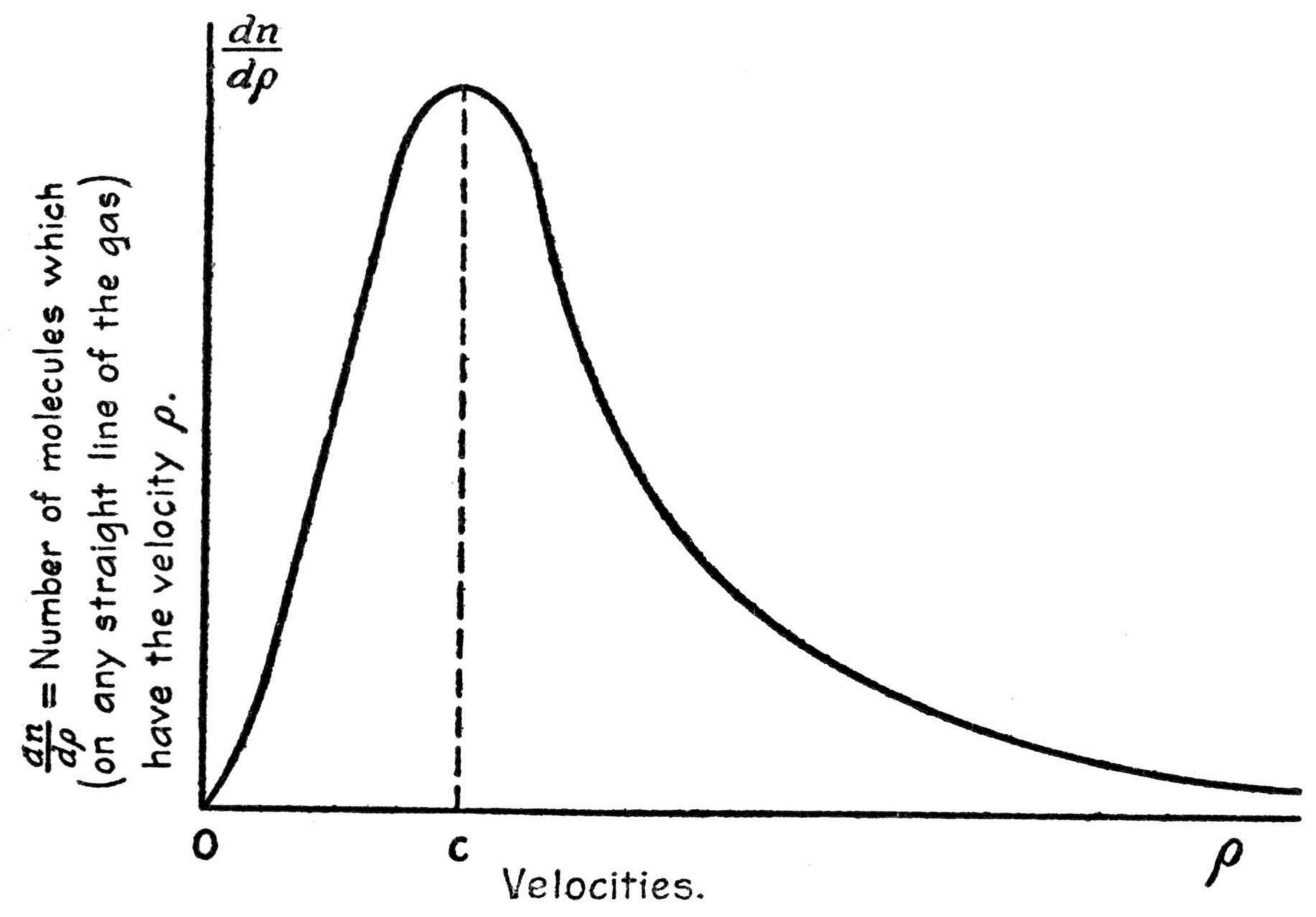

| Maxwell's law of distribution of molecular velocities | 48 | |

| Outline of proof, illustration, and consequences of this law | 48 | |

| SECTION B | ||

| Simple analytical expression for dependence of entropy on probability | 53 | |

| PLANCK'S derivation; illustration, limitations, consequences, features and comments | 53 | |

| SECTION C | ||

| Determination of a precise, numerical expression for the entropy of any physical configuration | 56 | |

| BOLTZMANN'S pioneer work, PLANCK'S exposition, and the six main steps | 56 | |

| Step a | ||

| Determination of the general expression for the | 57 | |

| Step b | ||

| Determination of the general expression for the entropy | 63 | |

| Step c | ||

| Special case of (b), namely, expression for the entropy | 63 | |

| Step d | ||

| Confirmation, by equating this value of | 64 | |

| Step e | ||

| PLANCK'S conversion of the expressions of (b) and (c) into more precise ones by finding numerical value of | 66 | |

| Step f | ||

| Determination of the dimensions of the universal constant | 67 | |

| PART III | ||

| THE PHYSICAL INTERPRETATIONS | ||

| SECTION A | ||

| Of the simple reversible operations in thermodynamics | ||

| Isometric, isobaric, isothermal, and isentropic change | 69 | |

| SECTION B | ||

| Of the fundamentally irreversible processes | ||

| Heat conduction, work into heat of friction, expansion without work, and diffusion of gases | 72 | |

| SECTION C | ||

| Of negative change of entropy | ||

| Some of its physical features and necessary accompaniments | 78 | |

| SECTION D | ||

| Physical significance of the equivalents for growth of entropy given on pp. 42-43 | 80 | |

| SECTION E | ||

| Physical significance of the more specific statements of second law given on pp. 44-47 | 81 | |

| PART IV | ||

| SUMMARY OF THE CONNECTION BETWEEN PROBABILITY, IRREVERSIBILITY, ENTROPY, AND THE SECOND LAW | ||

| SECTION A | ||

| (1) Prerequisites and conditions necessary for the application of the theory of probabilities | ||

| (a) Atomic theory; (b) like particles; (c) very numerous particles; (d) "elementary chaos" | 83 | |

| (2) Differences in the states of "elementary chaos" | 85 | |

| (3) Number of complexions, or probability of a chaotic state | 86 | |

| SECTION B | ||

| Irreversibility | 86 | |

| SECTION C | ||

| Entropy | 87 | |

| SECTION D | ||

| The Second Law | ||

| Its basis and best statements; it has no independent significance | 88 | |

| PART V | ||

| REACH OR SCOPE OF THE SECOND LAW | ||

| SECTION A | ||

| Its extension to all bodies | ||

| PLANCK'S presentation; fifteen steps in the proof | 91 | |

| SECTION B | ||

| General conclusion as to entropy changes | 98 |

[There is no difference between change of Entropy and Second Law, when each is fully defined.]

THIS article is intended for those students of engineering who already have some elementary knowledge of thermodynamics. It is intended to clear up a difficulty that has beset every earnest beginner of this subject. The difficulty is not one of application to engineering problems, although here too there have been widespread misconceptions,[1] for the expressions developed by CLAUSIUS are simple, have long been known and much used by engineers and physicists. The difficulty is rather as to the ultimate physical meaning of entropy. This term has long been known as a sort of property of the state of the body, has long been surmised to be of essentially a statistical nature, but with it all there was a sense that it was a sort of mathematical fiction, that it was somehow unreal and elusive, so it is no wonder that in certain engineering quarters it was dubbed the "ghostly quantity."

Now this instinct of the true engineer to understand things [Pg 1] down to the bottom is worthy of all encouragement and respect. For this reason and because the matter is of prime importance to the technical world, the final meaning of entropy (i.e., of the Second Law) must be clarified and realized. Indeed, we may well go beyond this somewhat narrow view and say that this is well worth doing because change of entropy constitutes the driving motive in all natural events; it has therefore a reach and a universality which even transcends that of the First Law, or Principle of the Conservation of Energy.

In striving to present the physical meaning of entropy and of the Second Law, the writer cannot lay claim to any originality; he has simply tried here to put in logical order the somewhat scattered propositions of the leading investigators of this subject and in such a way that the difficulties of apprehension might be minimized; in other words, to present the solutions of his own difficulties, in the hope that the solutions may be helpful to other students of engineering and thermodynamics. In overcoming these difficulties, the writer owes everything to the books and papers by PLANCK and BOLTZMANN, pre-eminently to PLANCK, who has so clearly and appreciatively interpreted the life work of BOLTZMANN.[2] The writer furthermore wishes to say that he has not hesitated here to quote verbatim from both these investigators and not always so that their own statements can be distinguished from his own. If any part of this presentation is particularly clear and exact the reader will be safe in crediting it to one or the other of these two investigators and expositors, although it would not be right to consider them responsible for everything contained in this little book.

In considering the proper approach to the matter in hand we must remember that[3] "in physical science there are two more or [Pg 2] less distinct modes of attack, namely, (a) a mode of attack in which the effort is made to develop conceptions of the physical processes of nature, and (b) a mode of attack in which the attempt is made to correlate phenomena on the basis of sensible things, things that can be seen and measured. In the theory of heat the first mode is represented by the application of the atomic theory to the study of heat phenomena, and the second mode is represented by what is called thermodynamics." In solving the special problem before us, as to the physical meaning of entropy and of the Second Law, our main dependence must be on the first mode of attack.

The second mode will furnish checks and confirmations of the results developed by the first, or we may say that the combination of the two modes will give the well-established characteristic equations and relations of bodies and their physical elements.

The whole discussion will now be taken up in a non-mathematical way, without the full proof required by a complete presentation, and about in this order:

(a) The definitions, general preliminaries and current statements of the matters considered.

(b) More or less precise statement of the primary relations and theorems.

(c) The physical interpretations.

(d) Summary of the connection between probability, irreversibility, entropy and the Second Law.

(e) Reach or scope of the Second Law.

On account of the difficulty which every student experiences in realizing the physical nature of entropy we will in the main confine our attention here to gases and indeed to their simplest case, the monatomic gas, and will as usual assume that the dimensions of an atom or particle are very small in comparison with the average distance between two adjacent particles, that for the atoms approaching collision the distance within which they exert a significant influence on each other is very small as compared with the [Pg 3] mean distance between adjacent atoms, and that between collisions the mean length of the particle's path is great in comparison with the average distance between the particles. Later on we will indicate in a very general and brief way how the entropy idea may be extended to other states of aggregation and to other than purely thermodynamic phenomena. Mostly, therefore, we will only consider states and processes in which heat phenomena and mechanical occurrences take place.

[1]See Entropy, by JAMES SWINBURNE; this author has called attention to necessary corrections and duly emphasized the engineering aspect.

[2]BOLTZMANN, Gas Theorie; PLANCK, Thermodynamik, Theorie der Wärmestrahlung, and Acht Vorlesungen über Theoretische Physik.

[3]Professor W. S. FRANKLIN, The Second Law of Thermodynamics: its basis in Intuition and Common Sense. Pop. Science Monthly, March, 1910.

[Pg 4]

(1) The "State" of a Body and its "Change of State"

As we will make constant use of the terms contained in this heading and as they here represent fundamentally important conceptions, we will seek to make them clear by presenting them in the various forms into which they have been cast by the different investigators, even at the risk of being considered prolix.

In the Introduction to this article we called attention to the two distinct modes of attacking any physical problem. Now the conception "state of a body" varies with the chosen mode of attack. Of course as both modes are legitimate and lead to correct results, these differences in the conception of "state" can be reconciled and a broader definition reached. We can illustrate these different methods of approach, as PLANCK has done, by assuming two different observers of the state of the body, one called the microscopic-observer and the other the macroscopic-observer. The former possesses senses so acute and powers so great that he can recognize each individual atom and can measure its motion. For this observer each atom will move exactly according to the elementary laws prescribed for it by General Dynamics. These laws, so far as we know them, also at once permit of exactly the opposite course of each event. Consequently there can be here no question of probability, of entropy or of its growth. On the other hand, the "macro-observer" (who perceives the atomic host, say as a homogeneous gas, and consequently applies to its mechanical and thermal [Pg 5] events the laws of thermodynamics) will regard the process as a whole to be an irreversible one in accordance with the Second Law.... Now a particular change of state cannot at the same time be both reversible and irreversible. But the one observer has a different idea of "change of state" from the other; the micro-observer's conception of "change of state" is different from that of the macro-observer. What then is "change of state"? The state of a physical system can probably not be rigorously defined otherwise than as the conception, as a whole, of all those physical magnitudes whose instantaneous values, under given external conditions, also uniquely determine the sequence of these changing values.

BOLTZMANN'S statement is much more clear, namely, "The state of a body is determined, (a) by the law of distribution of the particles in space and (b) by the law of distribution of the velocities of the particles; in other words, a body's condition is determined (a) by the number of particles which lie in each elementary realm of the space and (b) by a statement of the number of particles which belong to each elementary velocity group. These elementary realms are all equal and so are the elementary velocity groups equal among themselves. But it is furthermore assumed that each elementary realm and each elementary velocity group contains very many particles."
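BOLTZMANN'S definition lends itself to a small numerical illustration. The Python sketch below is not from the original text and is offered only as a rough aid: it assumes a one-dimensional vessel, and the names `occupation_numbers` and `boltzmann_state`, together with the parameters `box`, `v_max` and `cells`, are hypothetical. It tallies how many particles lie in each equal "elementary realm" of space and in each equal "elementary velocity group."

```python
from collections import Counter


def occupation_numbers(values, lower, upper, cells):
    """Count how many values fall into each of `cells` equal intervals of [lower, upper)."""
    width = (upper - lower) / cells
    counts = Counter()
    for v in values:
        index = int((v - lower) // width)
        # Clamp out-of-range values into the first or last cell.
        index = min(max(index, 0), cells - 1)
        counts[index] += 1
    return [counts[i] for i in range(cells)]


def boltzmann_state(positions, velocities, box, v_max, cells):
    """Return the state as (space distribution, velocity distribution).

    Assumed toy setting: one space co-ordinate in [0, box) and one
    velocity component in [-v_max, v_max).
    """
    space = occupation_numbers(positions, 0.0, box, cells)
    speed = occupation_numbers(velocities, -v_max, v_max, cells)
    return space, speed
```

In this sketch, interchanging two particles leaves the returned distributions unaltered, which is the sense in which the state so defined takes no account of which individual particle occupies which realm.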

Now if we ask the aforesaid two observers what they understand by the state of the atomic host or gas under consideration, they will give entirely different answers. The micro-observer will mention those magnitudes which determine the location and the velocity condition of all the individual atoms. This would mean in the simplest case, in which the atoms are regarded as material points, that there would be six times as many magnitudes as atoms present, namely, for each atom there would be three co-ordinates of location and three of velocity components; in the case of composite molecules there would be many more such [Pg 6] magnitudes. For the micro-observer, the state and the sequence of the event would not be determined until all these many magnitudes had been separately given. The state thus defined we will call the "micro-state." The macroscopic-observer on the other hand gets along with far fewer data; he will say that the state of the contemplated homogeneous gas is already determined by the density, the visible velocity and the temperature at each place of the gas and he will expect, when these magnitudes are given, that the course of the physical events will be completely determined, namely, will occur in obedience to the two laws of thermodynamics and therefore be bound to show an increase in entropy. The state thus defined we will call the "macro-state." The difference in the two observers is that one sees only the atomic events and the other the occurrences in the aggregate. The former would have the absolute mechanical idea of state and the latter the statistical idea. Before attempting to reconcile their apparently conflicting conclusions, we will here call attention to some necessary relations between the micro-state and the macro-state. In the first place we must remember that not all a priori possible micro-states are realized in nature; some are conceivable but never attain fruition. How shall we select what may be called these natural micro-states? The principles of general dynamics furnish no guide for such selection and so recourse may be had to any dynamic hypothesis whose selection will be fully justified by experience.
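The contrast between the two observers' data may be sketched numerically. The following Python is only an illustration under stated assumptions, not part of the original argument: the whole gas is treated as a single "place," each atom is a tuple `(x, y, z, vx, vy, vz)`, and the names `micro_state_size` and `macro_state` are hypothetical. The "thermal" value returned is merely a stand-in proportional to temperature, namely the mean squared velocity relative to the visible velocity.

```python
def micro_state_size(atoms):
    """Number of magnitudes the micro-observer needs: six per point atom."""
    return 6 * len(atoms)


def macro_state(atoms, volume):
    """Reduce a micro-state to the few mean values used by the macro-observer.

    Each atom is a tuple (x, y, z, vx, vy, vz). The third returned value
    is an assumed proxy for temperature, not a temperature in any unit.
    """
    n = len(atoms)
    density = n / volume
    # Visible velocity: the mean of each velocity component.
    visible = tuple(sum(a[3 + i] for a in atoms) / n for i in range(3))
    # Mean squared velocity measured relative to the visible velocity.
    thermal = sum(
        sum((a[3 + i] - visible[i]) ** 2 for i in range(3)) for a in atoms
    ) / n
    return density, visible, thermal
```

Two quite different lists of atoms may return the same triple from `macro_state`, which is the many-to-one relation between micro-states and macro-state on which the following paragraphs depend.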

Now PLANCK says: "In order to traverse this path of investigation, we must evidently first of all keep in mind all the conceivable positions and velocities of the individual atoms, which are compatible with particular values of the density, the velocity and the temperature of the gas, or, in other words, we must consider all the micro-states which belong to a particular macro-state and must examine all the different events which follow from the different micro-states according to the fixed laws of dynamics. Now up to this time, the closer calculation and combination [Pg 7] of these minute elements has always given the important result that the vast majority of these micro-states belong to one and the same macro-state or aggregate, and that only comparatively few of the said micro-states furnish an anomalous result, and these few are characterized by very special and far-reaching conditions existing between the locations and the velocities of adjacent atoms. And, furthermore, it has appeared that the almost invariably resulting macro-event is just the very one perceived by the macroscopic observer, the one in which all the measurable mean values have a unique sequence, and consequently and in particular satisfies the second law of thermodynamics."

"Herewith is revealed the bridge of reconciliation between the two observers. The micro-observer needs only to take up in his theory the physical hypothesis, that all such particular cases (which premise very special, far-reaching conditions between the states of adjacent and interacting atoms) do not occur in Nature; or in other words, the micro-states are in 'elementary disorder' (elementar ungeordnet). This secures the unique (unambiguous) character of the macroscopic event and makes sure that the Principle of the Growth of Entropy will be satisfied in every direction."

Before elaborating all that is implied in this hypothesis of "elementary disorder" we will again point out that for each macro-state (even with settled values of density and temperature) there may be many micro-states which satisfy it in the aggregate.
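How many micro-states may answer to one macro-state can be seen in a deliberately simple counting model. The Python below is a toy sketch and not the author's or PLANCK'S calculation: it assumes labelled atoms, each of which lies either in the left or the right half of a vessel, and it takes the number of atoms in the left half as the only macroscopic datum. The names `complexions` and `share_near_even` and the parameter `tolerance` are hypothetical.

```python
from math import comb


def complexions(n_atoms, n_left):
    """Number of distinct micro-states with exactly n_left labelled atoms in the left half."""
    return comb(n_atoms, n_left)


def share_near_even(n_atoms, tolerance):
    """Fraction of all 2**n_atoms micro-states whose left-half count lies
    within tolerance * n_atoms of an even split."""
    half = n_atoms / 2
    near = sum(
        complexions(n_atoms, k)
        for k in range(n_atoms + 1)
        if abs(k - half) <= tolerance * n_atoms
    )
    return near / 2 ** n_atoms
```

In this toy model the share of arrangements lying near the even split grows toward unity as the number of atoms increases, so that the exceptional arrangements become a vanishing minority; a real gas of course requires the velocities to be counted as well.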

According to PLANCK, "it is easy to see that the macro-observer deals with mean values; for what he calls density, visible velocity, temperature of the gas, are for the micro-observer certain averages, statistical data, which have been suitably obtained from the spatial arrangement and the velocities of the atoms. But with these averages the micro-observer at first can do nothing even if they are known for a certain time, for thereby the sequence of events is by no means settled; on the contrary, he can easily [Pg 8] with said given averages ascertain a whole host of different values for the location and velocities of the individual atoms, all of which correspond to said given averages, and yet some of these lead to wholly different sequences of events even in their mean values," events which do not at all accord with experience. It is evident, if any progress is to be made, that the micro-observer must in some suitable way limit the manifold character of the multifarious micro-states. This he accomplishes by the hypothesis of "elementary disorder" about to be more fully defined.

In passing we may here note for future use, that what has just been said concerning macro-states (aggregates) with "settled" mean velocity, density and temperature, applies also to states unsettled in the aggregate, so far as concerns the manifold character of the conceivable constituent micro-states and the differences in the mean character of their sequences. Even after the above limiting hypothesis removes all illegitimate micro-states, an enormously greater number of legitimate ones will be left to constitute the number of complexions properly belonging to the state contemplated. We may also add that it seems quite evident that the numbers representing these complexions will be different in the settled and unsettled states even if the latter should ultimately possess the mean velocity, density and temperature of the former.

On the other hand, we also point out that for one and the same set of external conditions the macro-state may itself vary very greatly. When it has a settled density and temperature, it is said to be in a stationary state, to be in thermal equilibrium and, anticipating, we may add that it then has maximum entropy; in short we may say it is in a "normal" condition. But the external conditions remaining the same, before attaining to said "normal" ultimate state, it may pass through a whole series of so-called "abnormal" states after it leaves its initial condition. While it is in any one of these "abnormal" states, it may be said to be in a more or less turbulent condition; [Pg 9] it may then possess whirls and eddies; it may have different densities and temperatures in its different parts and then it will be difficult or impossible to measure these external physical features of its state as a whole. All this implies ever-varying atomic locations and velocities, but does not indicate any such special far-reaching regularities between adjacent and interacting particles as would vitiate at any stage our hypothesis of "elementary disorder" (elementar ungeordnet) or "molecular chaos."

Before going into more detail concerning this particular chaotic condition of the particles we will give PLANCK'S somewhat fuller statement of what constitutes the "state" of a physical system at a particular time and under given external conditions. It is, "the conception as a whole of all those mutually independent magnitudes which determine the sequence of events occurring in the system so far as they are accessible to measurement; the knowledge of the state is therefore equivalent to a knowledge of the initial conditions. For example, in a gas composed of invariable molecules the state is determined by the law of their space and velocity distribution, i.e., by the statement of the number of molecules, of their co-ordinates and velocity components which lie within each single small region. The number of molecules in any one of these different regions is in general entirely independent of the number in any other region, for the state need not be a stationary one nor one of equilibrium; these numbers should therefore all be separately known if the state of the gas is to be considered as given in the absolute mechanical sense. On the other hand, for the characterization of the state in the statistical sense, it is not necessary to go into closer detail concerning the molecules present in each elementary space; for here the necessary supplement is supplied by the hypothesis of molecular chaos, which in spite of its mechanically indeterminate character guarantees the unambiguous sequence of the physical events." [Pg 10]

(2) Further Elucidation of this Essential Condition of "Elementary Chaos." Sundry Aspects of Haphazard

To gain as complete an understanding as possible of this fundamental idea we will now give the views of the several investigators as to the physical features of this chaotic state. We have seen how PLANCK, the chief expositor of BOLTZMANN, boldly excludes from consideration all cases leading to anomalous results, because of the very special conditions existing between the molecular data, by assuming that these cases do not occur in Nature. PLANCK reminds the physicists who object to the hypothesis of elementary disorder because they feel it is unnecessary or even unjustifiable, that the hypothesis is already much used in Physics, that tacitly or otherwise it underlies every computation of the constants attached to friction, diffusion and the conduction of heat. On the other hand he reminds others, those inclined to regard the hypothesis of "elementary disorder" as axiomatic, of the theorem of H. POINCARÉ, which excludes this hypothesis for all times from a space surrounded with absolutely smooth walls. PLANCK says that the only escape from the portentous sweep of this proposition is that absolutely smooth walls do not exist in Nature.

The foregoing thought PLANCK has also put in a slightly different way. Appreciating that all mechanically possible simultaneous arrangements and velocities of molecules are not realized in Nature, the concept of "elementary disorder" implies one limitation of the conceivable molecular states, namely that, between the numerous elements of a physical system there exist no other relations than those conditioned by the existing measurable mean values of the physical features of the system in question.

Another, briefer but equivalent, definition is that: "In Nature all states and processes which contain numerous independent (unkontrollierbar) constituents are in 'elementary disorder' (elementar ungeordnet)." The constituents are molecular elements [Pg 11] in mechanics and in thermodynamics and the energy elements in radiation.

The German word "unkontrollierbar"[4] here used may also with some justice be translated as, unconditioned, undetermined, unmeasurable, unregulated, uncorrelated, ungovernable or haphazard. But whichever term is best, PLANCK, mechanically speaking, meant by it, the confused, unregulated and whirring intermingling of very many atoms.

Either of these two equivalent definitions implies that such elementary disorder or chaos is a condition of sufficiently complete haphazard to warrant the application of the Theory of Probabilities to the unique (unambiguous) determination of the measurable physical features of the process viewed as a whole.

The foregoing ideas more or less tacitly underlie the whole of BOLTZMANN'S great pioneer work in this vast field. He it was who clearly showed that the Second Law could be derived from mechanical principles: that entropy was a property of every state, turbulent or otherwise; that the entropy idea would be emancipated from all thought of human, experimental, skill, and who thereby raised the Second Law to the position of a real principle. He did all this by a general basing of the idea of entropy on the idea of probability. Consequently we find much attention paid in all his work to haphazard molecular conditions. He first used the terms "molekular-geordnet" (molecularly ordered, or arranged), and "molekular ungeordnet" (molecularly disordered or disarranged), which latter phrase we must regard as synonymous with the term "elementar ungeordnet" (elementary disorder or chaos) with which we have already become acquainted in PLANCK'S presentation. We will, therefore, confine ourselves here to BOLTZMANN'S illustrations of these terms, for his work does not, in these particulars, contain any [Pg 12] sharp definitions. Indeed he may have feared over-precision and may have trusted to the use he made of the terms at different times to convey their meaning.

Concerning some of the characteristics of BOLTZMANN'S haphazard motion we take the following from Vol. I of his "Vorlesungen über Gas Theorie."

If in a finite part of a gas the variables determining the motion of the molecules have different mean values from those in another finite part of the gas (for example if the mean density or mean velocity of a gas in one-half of a vessel is different from those in the other half), or more generally, if any finite part of a gas behaves differently from another finite part of a gas, then such a distribution is said to be "molar-geordnet" (in molar order). But when the total number of molecules in every unit of volume exists under the same conditions and possesses the same number of each kind of molecules throughout the changes contemplated, then the same number of molecules will leave a unit volume and will enter it so that the total number ever present remains the same; under such conditions we call the distribution "molar-ungeordnet" (in molar disorder) and that finite distribution is one of the characteristics of the haphazard state to which the Theory of Probabilities is applicable. [As another illustration of the excluded molar-geordnet states we may instance the case when all motions are parallel to one plane.]
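The test for molar order described above, a comparison of mean values in two finite parts, may be sketched as follows. This Python is only an illustration under assumptions of our own: a one-dimensional vessel divided into two halves, speeds in place of full velocities, and an arbitrary relative tolerance `rel_tol`, since with a finite number of molecules the two means never agree exactly. The names `half_means` and `is_molar_ordered` are hypothetical.

```python
def half_means(positions, speeds, box):
    """Return (count, mean speed) for the left and right halves of a 1-D vessel."""
    halves = {"left": [], "right": []}
    for x, v in zip(positions, speeds):
        halves["left" if x < box / 2 else "right"].append(v)
    return {
        side: (len(vs), sum(vs) / len(vs) if vs else 0.0)
        for side, vs in halves.items()
    }


def is_molar_ordered(positions, speeds, box, rel_tol=0.1):
    """True when the halves differ in count or mean speed by more than rel_tol."""
    (n_l, v_l), (n_r, v_r) = half_means(positions, speeds, box).values()

    def differs(a, b):
        # Relative difference, measured against the larger magnitude.
        scale = max(abs(a), abs(b))
        return scale > 0 and abs(a - b) / scale > rel_tol

    return differs(n_l, n_r) or differs(v_l, v_r)
```

A gas crowded into one half of the vessel is reported as molar-ordered, while one with equal numbers and equal mean speeds in both halves is not; the sketch says nothing about regularities among neighboring molecules, which are taken up next.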

But although in passing from one finite part to another of a gas no regularities (of average character) can be discerned, yet infinitesimal parts (say of two or more molecules) may exhibit certain regularities, and then the distribution would be "molekular-geordnet" (molecularly-ordered) although as a whole the gas is "molar-ungeordnet." For example (to take one of the infinite number of possible cases) suppose that the two nearest molecules always approached each other along their line of centers, or that a molecule moving with a particularly slow speed always had ten (10) slow neighbors; then the distribution [Pg 13] would be "molekular-geordnet." But then the locality of one molecule would have some influence on the locality of another molecule, and then in the Theory of Probabilities the presence of one molecule in one place would not be independent of the presence of some other molecule in some other place. Such dependence is not permitted by the Theory of Probabilities. Before, however, we can further describe what is here perhaps the most important term (molekular-ungeordnet), we must point out that BOLTZMANN considers the number of molecules of one kind whose component velocities along the co-ordinate axes are confined between the limits

ξ and ξ + dξ, η and η + dη, ζ and ζ + dζ, (1)

and also the number of molecules of another kind whose component velocities similarly lie between the limits

ξ₁ and ξ₁ + dξ₁, η₁ and η₁ + dη₁, ζ₁ and ζ₁ + dζ₁; (2)

then, considering the chances that a molecule of the first kind shall have velocities between the limits specified in (1) and a molecule of the second kind have velocities between limits (2), BOLTZMANN intimates that these chances are independent of the relative position of the molecules. Where there is such complete independence, or absence of all minute regularities, the distribution, according to BOLTZMANN, is "molekular-ungeordnet" (molecularly-disordered).

BOLTZMANN furthermore informs us that, as soon as the mean length of path in a gas is great in comparison with the mean distance between two adjacent molecules, the neighboring molecules will quickly become different from what they formerly were. Therefore it is exceedingly probable that a "molekular-geordnete" (but molar-ungeordnete) distribution would shortly pass into a "molekular-ungeordnete" distribution.

Furthermore, it results from the constitution of a gas that the place where a molecule collides is entirely independent of the spot where its preceding collision took place. Of course, this [Pg 14] independence could be maintained for an indefinite time only by an infinite number of molecules.

The place of collision of a pair of molecules must in our Theory of Probabilities be independent of the locality from which either molecule started.

From all the preceding we must infer what measure of haphazard BOLTZMANN considers necessary for the legitimate use of the Theory of Probabilities.

BOLTZMANN in proving his H-Theorem,[5] which establishes the one-sidedness of all natural events, makes the explicit assumption that the motion at the start is both "molar- und molekular-ungeordnet" and remains so. Later on, he assumes the same things but adds that if they are not so at the start they will soon become so; therefore said assumption does not preclude the consideration by Probability methods of the general case or the passage from "geordnete" to "ungeordnete" conditions which characterizes all natural events.
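For orientation, the quantity to which the H-theorem refers may be written in the form commonly given for a spatially uniform gas. The symbols $f$ (the velocity distribution function) and $\mathbf{v}$ (the molecular velocity) are notation assumed here for illustration and do not appear in the text above.

```latex
H(t) = \int f(\mathbf{v}, t)\,\ln f(\mathbf{v}, t)\; d^3v ,
\qquad
\frac{dH}{dt} \le 0 .
```

Under the assumption of molecular disorder the inequality holds at every instant, and $H$ ceases to change only when $f$ has reached MAXWELL'S distribution, that is, in the state of thermal equilibrium.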

In fact these very definitions show solicitude for securing the uninterrupted operation of the laws of probability. BOLTZMANN intimates his approval of S. H. BURBURY'S statement of the condition of independence underlying his work.

Here S. H. BURBURY[6] simplifies the matter by assuming that any unit of volume of space contains a uniform mixture of differently speeded molecules and then says:

"Let $V$ be the velocity of the center of gravity of any pair of molecules and $R$ their relative velocity. Then the following condition (here called $A$) holds: For any given direction of $R$ before collision, all directions of $R$ after collision are equally probable. Then BOLTZMANN'S H-theorem proves that if condition $A$ be satisfied, then if all directions of the relative velocity $R$ for given $V$ are not equally likely, the effect of collisions [Pg 15] is to make $H$ diminish." [In essence BURBURY'S condition $A$ says no more than that Theory of Probabilities is applicable for finding number of collisions.] Furthermore, "any actual material system receives disturbances from without, the effect of which coming at haphazard without regard to state of system for the time being is, pro tanto, to renew or maintain the independence of the molecular motions, that very distribution of co-ordinates (of collision) which is required to make $H$ diminish. So there is a general tendency for $H$ to diminish, though it may conceivably increase in particular cases. Just as in matters political, change for the better is possible, but the tendency is for all change to be from bad to worse." Here BURBURY states what is practically true in all actual cases and thus furnishes an additional reason, if that were needed, for the legitimacy of the Probability method pursued by BOLTZMANN, and another explanation of why the results obtained are in such perfect accord with experience.

As BURBURY'S remarks with respect to the nature of "elementary chaos" under consideration are always illuminating, we will, at the risk of repeating something already said, quote the following:

"The chance that the spheres approaching collision shall have velocities within assigned limits is independent of their relative position, and of the positions and velocities of all other spheres, and also independent of the past history of the system except so far as this has altered the distribution of the velocities inter se. In the following example this independence is satisfied for the initial state and, for the assumed method of distribution, has no past history.

"Example. A great number of equal elastic spheres, each of unit mass and diameter $c$, are at an initial instant set in motion within a field $S$ of no force and bounded by elastic walls. The initial motion is formed as follows: (1) One person assigns component velocities $u, v, w$ to each sphere according to any law subject to the conditions that $\sum u = \sum v = \sum w = 0$ and that $\sum (u^2 + v^2 + w^2) =$ [Pg 16] a given constant. (2) Another person, in complete ignorance of the velocities so assigned, scatters the spheres at haphazard throughout $S$. And they start from the initial positions so assigned by (2) with the velocities assigned to them respectively by (1)."

The system thus synthetically constructed would without doubt be "molekular-ungeordnet" at the start; in fact, it is as near an approach to chaos as is possible in an imperfect world. But there is reason to doubt if it would continue to be thus "molekular-ungeordnet." For the distribution of velocities is according to any law consistent with the above-mentioned conditions, and some such laws would lead to results hostile to the Second Law; we may then safely say that such laws of velocity distribution would never occur in Nature and would therefore belong to the cases which have been specially excepted.

Now there are mechanical systems which possess the entropy property and it has been truly said that the Second Law and irreversibility do not depend on any special peculiarity of heat motion, but only on the statistical property of a system possessing an extraordinary number of degrees of freedom. In this sense Professor J. W. GIBBS treated Mechanics statistically and showed that then the properties of temperature and entropy resulted. This matter has already been touched upon, but as numerous degrees of freedom is a feature of the "elementary chaos" under consideration it deserves repetition here and more than a passing mention.

Illustration of Degrees of Freedom. Refer a body's motion to three axes, $X$, $Y$, $Z$. If a body has as general a motion as possible, it may be resolved into translations parallel to the $X$, $Y$, $Z$ axes and to rotations about these axes. Each of these two sets furnishes three components of motion or a total of six components; then we say that the perfectly unconstrained motion of the body has six degrees of freedom. If a body merely translates parallel to one of the co-ordinate planes, we say it has two degrees of freedom. When [Pg 17] we come to consider molecular motion in general and the independence which characterizes the motion of each of the many molecules we see that altogether we have here an extraordinary number of degrees of freedom, and composed of such is the realm of our "elementary chaos."

If we go to the other extreme and think of only one atom, we see at once that we cannot properly speak of its disorder. But the case is different with a moderate number of atoms, say, a hundred or a thousand. Here we surely can speak of disorder if the co-ordinates of location and the velocity components are distributed by haphazard among the atoms. But as the process as a whole, the sequence of events in the aggregate, may not with this comparatively small number of atoms take place before a macroscopic observer in a unique (unambiguous) manner, we cannot say that we have here reached a true state of "elementary chaos." If we now ask as to the minimum number of atoms necessary to make the process an irreversible one, the answer is, as many as are necessary to form determinate mean values which will define the progress of the state in the macroscopic sense. Only for these mean values does the Second Law possess significance; for these, however, it is perfectly exact, just as exact as the theorem of probability, which says that the mean value of numerous throws with one cubical die is equal to 3½.
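The closing remark about the cubical die can be checked with a short sketch. The function names and the fixed seed below are illustrative assumptions, not part of the original text; the sketch only shows how the mean of many haphazard throws settles on the exact value 3½ while a handful of throws does not.

```python
from fractions import Fraction
import random

def exact_mean_of_die(faces=6):
    """Exact expected value of one throw of a fair die with `faces` faces."""
    return Fraction(sum(range(1, faces + 1)), faces)

def sample_mean_of_die(throws, seed=0, faces=6):
    """Mean of `throws` simulated throws; the seed is fixed only for repeatability."""
    rng = random.Random(seed)
    return sum(rng.randint(1, faces) for _ in range(throws)) / throws

print("exact mean:", exact_mean_of_die())
for n in (10, 1_000, 100_000):
    # The scatter about 3.5 shrinks as the number of throws grows.
    print(n, "throws ->", sample_mean_of_die(n))
```

The same behavior is what the text requires of atoms: determinate mean values exist only when the individuals are numerous enough.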

We may now properly infer from all these views that the state of "elementary chaos" (or "molekular ungeordnete" motion) is the necessary condition for adequate haphazard and makes the application of the Theory of Probabilities possible.

[4]On p. 133 of Wärmestrahlung PLANCK says, "only measurable mean values are kontrollierbar," and this may help us to get the meaning here.

[5]In BOLTZMANN'S H-Theorem we have a process (consisting of a number of separately reversible processes) which is irreversible in the aggregate.

[6]Nature, Vol. LI, p. 78, Nov. 22, 1894.

(3) Settled and Unsettled States; Distinction between Final Stage of Elementary Chaos and its Preceding Stages

The immediate purpose in the next few pages is to establish the (a) distinction between the successive stages of "elementary disorder" (chaos) as they develop in their inevitable passage [Pg 18] from "abnormal" conditions to the final and so-called "normal" condition of thermal equilibrium and, furthermore, (b) to show that each of these stages is "elementar-ungeordnet" and (c) that in each one sufficient haphazard prevails to permit the legitimate application of the Theory of Probabilities.

We will first describe the unsettled (abnormal) and settled (normal) states, respectively. When we consider the general state of a gas "we need not think of the state of equilibrium, for this is still further characterized by the condition that its entropy is a maximum. Hence in the general or unsettled state of the gas an unequal distribution of density may prevail, any number of arbitrarily different streams (whirls and eddies) may be present, and we may in particular assume that there has taken place no sort of equalization between the different velocities of the molecules. We may assume beforehand, in perfectly arbitrary fashion, the velocities of the molecules as well as their co-ordinates of location. But there must exist (in order that we may know the state in the macroscopic sense), certain mean values of density and velocity, for it is through these very mean values that the state is characterized from the macroscopic standpoint." The differences that do exist in the successive stages of disorder of the unsettled state are mainly due to the molecular collisions that are constantly taking place and which thus change the locus and velocity of each molecule.

We may now easily describe the settled state as a special case of the unsettled one. In the settled state there is an equal distribution of density throughout all the elementary spaces, there are no different streams (whirls or eddies) present, and an equal partition of energy exists for all the elementary spaces. In it thermal equilibrium exists, the entropy is a maximum, and the temperature of the state has now a definite meaning, because temperature is measured by the mean energy of the molecules for this state of equilibrium. The condition is said to be a "stationary" or permanent one, for the mean values of the density, [Pg 19] velocity, and temperature of this particular aggregate no longer change, although molecular collisions are still constantly occurring.

Well-known examples of the unsettled state of a system are: The turbulent state with its different streams, whirls, and eddies, the state in which the potential and kinetic energy is unequally distributed; for instance, when one part is at a high pressure and another part at a lower pressure, when one part is hotter than another part, and when unmixed gases are present in a communicating system.

A more specific feature of the unsettled state may be found in the accompaniment to BURBURY'S condition $A$ (already mentioned at bottom of p. 15) where it is intimated that (at the start and after collision) all directions of the relative velocity $R$ may not be equally likely.

When such differences have all disappeared to the extent that equal elementary spaces possess their equal shares of the different particles, velocities, and energies, the system will be a settled one, be in thermal equilibrium, and will possess a maximum entropy and a definite temperature. Moreover, BURBURY'S condition $A$ is here fully satisfied.

At this point we again call attention to the fact that in both the unsettled and settled states of a system all conceivable micro-states are not equally likely to obtain. On p. 19 mention was made that the unsettled and the settled state each possessed a host of conceivable micro-states which agreed with the characteristic averages of their respective macro-states (the unsettled and the settled ones), and yet in each set some of these led subsequently to events which did not accord with experience. Therefore for both the unsettled and the settled state we must limit the manifold character of their micro-states by eliminating all those micro-states which lead to results contrary to experience. This is accomplished by assuming the hypothesis of "elementary-disorder" (elementar-ungeordnet) to obtain for the unsettled as well as the settled state. Now so far as the haphazard [Pg 20] character of the remaining motions is concerned, we might stop right here, for the very nature of this hypothesis insures results in harmony with experience, i.e., with the undisturbed operation of the laws of probability.

But if we do not stop here, preferring to examine some of the special features of fortuitous motion, as detailed on pp. 10, 13, 14 and 17, we still see that by this hypothesis we have not removed the haphazard character of the remaining motions in either the unsettled or the settled state. For instance, we have not removed BURBURY'S condition $A$. We must remember, too, that in PLANCK'S briefest statement of "elementary disorder" (bottom of p. 11), two important features of haphazard are emphasized, viz.: the independence and great number of the constituents. BOLTZMANN in his Gas Theorie of course considers the special features which underlie the application of the Calculus of Probabilities; thus he says they are the great number of molecules and the length of their paths, which together make the laws of the collision of a molecule in a gas independent of the place where it collided before. Neither has the introduction of the hypothesis of "elementary disorder" done away with these special features. There have simply been excluded from consideration such pre-computed and prearranged regularities in the paths and directions of molecules as purposely interfere with the operation of the laws of probability. We are still free to consider all the imaginable positions and velocities of the individual molecules which are compatible with the mean velocity, density, and temperature properly characteristic of each stage of the passage from the unsettled to the settled state. For adequate haphazard we only need the assumption that the molecules fly so irregularly as to permit the operation of the laws of probabilities. Such a presentation as this of course calls for complete trust that all the specified requirements have been adequately met, and BOLTZMANN'S eminence as a mathematical physicist and the endorsement of his peers must be our guarantee for such confidence and trust.

[Pg 21]

Before closing this discussion of unsettled and settled states we will insert here two remarks which, at this stage, are really anticipatory in their nature. The first is that, under the limitation imposed by our supplementary hypothesis of "elementary chaos," the very sharpest definition of any macro-state is the number of its possible micro-states. This is evidently the number of permutations possible with the given locus and velocity elements under the restriction imposed above. Later on we will find that this number of possible micro-states is smaller for the unsettled state than for the settled one. This gives us a clean-cut distinction between the two states contemplated. The second remark is that the inevitable change in the system as a whole is always from the less probable to the more probable; it is a passage from an unsettled state of the system to its settled state, and this is here synonymous with the growth of the number of possible micro-states. It is this difference between the initial and final states which constitutes the universal driving motive in all natural events.
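The first remark can be made concrete with a small counting sketch. It rests on simplifying assumptions that are not in the text: only location cells are considered (velocities are ignored), the molecules are treated as distinguishable, and the two occupation lists are hypothetical numbers chosen for illustration.

```python
from math import factorial, log

def complexions(occupation):
    """Number of ways to assign N distinguishable molecules to cells so that
    cell i holds occupation[i] of them: N! / (n1! * n2! * ...)."""
    count = factorial(sum(occupation))
    for n in occupation:
        count //= factorial(n)  # each partial quotient is an exact integer
    return count

unsettled = [9, 2, 1, 0]  # hypothetical uneven distribution of 12 molecules over 4 cells
settled = [3, 3, 3, 3]    # even distribution of the same 12 molecules

w_unsettled = complexions(unsettled)
w_settled = complexions(settled)
print(w_unsettled, w_settled)            # 660 369600
print(log(w_unsettled), log(w_settled))  # the logarithm grows with the count
```

Even with only twelve molecules the even distribution admits several hundred times as many micro-states as the uneven one; with the numbers met in a real gas the disparity becomes overwhelming.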

(1) The Probability Concept, its Usefulness in the Past, its Present Necessity, and its Universality.

Its essential value in this physical discussion is evidenced by the fact that we have almost unwittingly been forced to refer to it constantly in all of our preliminaries. But when this concept is first broached to a student, he feels about it like the "man in the street"; it is by the latter regarded as a matter of chance and hence of uncertainty and unreliability; moreover, the latter knows in a vague way that the subject has to do with averages, that it is often of a statistical nature, and knows that statistics in general are widely distrusted. The student is at [Pg 22] first likely to share these views with said man in the street, and at best feels that its introduction is of remote interest, far fetched, and tends to hide and dissipate the kernel of the matter. The student must disabuse himself of these false notions by reflecting how much there is in Nature that is spontaneous, in other words, how many events there are in which there is a passage from a less probable to a more probable condition and that he cannot afford to despise or ignore a Calculus which measures these changes as exactly as possible.

In this connection BOLTZMANN says: (W. S. B. d. Akad. d. Wiss., Vol. LXVI, B 1872, p. 275).

"The mechanical theory of heat assumes that the molecules of gases are in no way at rest but possess the liveliest sort of motion, therefore, even when a body does not change its state, every one of its molecules is constantly altering its condition of motion and the different molecules likewise simultaneously exist side by side in most different conditions. It is solely due to the fact that we always get the same average values, even when the most irregular occurrences take place under the same circumstances, that we can explain why we recognize perfectly definite laws in warm bodies. For the molecules of the body are so numerous and their motions so swift that indeed we do not perceive aught but these average values. We might compare the regularity of these average values with those furnished by general statistics which, to be sure, are likewise derived from occurrences which are also conditioned by the wholly incalculable co-operation of the most manifold external circumstances. The molecules are as it were like so many individuals having the most different kinds of motion, and it is only because the number of those which on the average possess the same sort of motion is a constant one that the properties of the gas remain unchanged. The determination of the average values is the task of the Calculus of Probabilities. The problems of the mechanical theory of heat are therefore problems in this calculus. It would, however, be a mistake to think any uncertainty [Pg 23] is attached to the theory of heat because the theorems of probability are applied. 
One must not confuse an imperfectly proved proposition (whose truth is consequently doubtful) with a completely established theorem of the Calculus of Probabilities; the latter represents, like the result of every other calculus, a necessary consequence of certain premises, and if these are correct the result is confirmed by experience, provided a sufficient number of cases has been observed, which will always be the case with Heat because of the enormous number of molecules in a body."

To become more specific we will mention some of the problems to which the Theory of Probabilities has been profitably applied. In business to life and fire insurance; in engineering to reducing the inevitable errors of observations by the Method of Least Squares; and in physics to the determination of Maxwell's Law of the distribution of velocities. The results thus obtained are universally trusted and accepted by experts. Why then should this Calculus not be applicable to the more general natural events?

In this connection consider some of its good points: (a) It eliminates from a problem the accidental elements if the latter are sufficiently numerous; (b) it deals legitimately with averages; (c) it involves combination considerations other than averages; (d) it is available for non-mechanical as well as mechanical occurrences and thus (e) has a capacity for covering the whole range of natural events, giving it a character of universality which is now its most valuable asset.

As an example of this we may instance BOLTZMANN'S deservedly famous H-theorem, which establishes the one-sidedness of all natural events.[7] Concerning it, this master in mathematical physics says:

"It can only be deduced from the laws of probability that, if the initial state is not especially arranged for a certain purpose, the [Pg 24] probability that $H$ decreases is always greater than that it increases." In this connection we may add that BOLTZMANN looked forward to a time, "when the fundamental equations for the motion of individual molecules will prove to be merely approximate formulas, which give average values which, according to the Theory of Probabilities, result from the co-operation of very many independently moving individuals constituting the surrounding medium, for example, in meteorology the laws will refer only to average values deduced by the Theory of Probabilities from a long series of observations. These individuals must of course be so numerous and act so promptly that the correct average values will obtain in millionths of a second."

To further strengthen our faith we may point out that the probability method has been successfully used to determine unique results from complicated conditions and has been employed for the general treatment of problems. In the case before us it has solved the entropy puzzle which has exercised physicists, as well as engineers, for decades, and it has thereby emancipated the Second Law from all anthropomorphism, from all dependence on human experimental skill. When we take the broadest possible view of its character, this Calculus enables us to read the present riddle of our universe, namely, why it is in its present improbable state. We have therefore in this Calculus an engine for investigation which is of great power and is likely to play a large part in the future in the ascertainment of physical truth. Of course it must then be in the hands of masters. It is they and they alone who can properly and adequately interpret such a physical problem as the one before us. In scientific work our last court of appeal must be Nature, and we therefore say: The best justification for the use of the Theory of Probabilities in our problem is that its results are in such complete accord with the facts.

In dealing with this physical engine of investigation, we must again call attention to some of the features of haphazard necessary [Pg 25] for its legitimate application. Of course the statement of these features will vary with the mechanical or non-mechanical character of the problem to which it is applied. As we are here dealing mainly with the former, we will limit ourselves to its features: (a) The elements dealt with must be very numerous, strictly speaking, infinite; (b) as a phase of (a) we may say also that when we speak of the probability of a state we express the thought that it can be realized in many different ways; (c) when we speak of the relative directions of a pair of molecules all possible directions must be considered; (d) we must so weight the elements, say, in (a), (b), and (c) that they are equally likely; (e) every one of the entering elements must possess constituents of which each individual is independent of every other; for instance, (f) in a gas the place where a molecule collided must be independent of the place where it collided before. In our physical problem all of these features are not always realized; for instance, the number of particles of gas is only finite instead of being infinite; again, all relative velocities after collision of a pair of molecules are not equally likely. BOLTZMANN and BURBURY provide for these shortcomings by very truly asserting that in actual cases we are not dealing with isolated systems, that the surrounding walls are not impervious to external influences, and that the latter come at haphazard without regard to the internal state of the system at the time, thus renewing and maintaining the desired state of haphazard.

Methods. This Calculus works largely by the determination of averages and its results must be interpreted accordingly. Moreover, for the present we will take a popular, practical view of these results and consider a very great improbability as equivalent to an impossibility. Numerical computations are essential in most uses of this Calculus, but here they will be entirely omitted.
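Why a very great improbability may be treated as an impossibility can be seen from a simple sketch. The example is an assumption introduced here, not one taken from the text: each of n independent molecules is supposed equally likely to be found in either half of a vessel, and the chance that all are found in the left half at once is computed on a logarithmic scale.

```python
from math import log10

def log10_prob_all_in_left_half(n_molecules):
    """log10 of the chance that n independent molecules are all found in the
    left half of a vessel, each half being equally likely for each molecule."""
    return -n_molecules * log10(2)

for n in (10, 100, 10**6):
    exponent = round(log10_prob_all_in_left_half(n), 1)
    print(n, "molecules: probability is about 10 **", exponent)
```

For ten molecules the chance is about one in a thousand; for a hundred it is already below one in 10^30, and for any quantity of gas that can be handled it is far beyond the reach of observation.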

[7]The H-theorem considers a process (consisting of a number of separate, reversible processes) which is irreversible in the aggregate.

[Pg 26]

(2) What is Meant by the Probability of a State? Example

To come back to the matter in hand we will now show what is here meant by the probability of any state.

When we speak of the probability of a particular "elementar-ungeordnete" state, we thereby imply that this state may be variously realized. For every state (which contains many like independent constituents) corresponds to a certain "distribution," namely, a distribution among the gas molecules of the location co-ordinates and of the velocity components. But such a distribution is a permutation problem, is always an assignment of one set of like elements (co-ordinates, velocity components) to a different set of like elements (molecules). So long as only a particular state is kept in view, it is of consequence as to how many elements of the two sets are thus interchangeably assigned to each other and not at all as to which individual elements of the one set are assigned to particular individual elements of the other set.[8] Then a particular state may be realized by a great number of assignments individually differing from one another, but all equally likely to occur.[9] If with PLANCK we call such an assignment a "complexion,"[10] we may now say that in general a particular state contains a large number of different, but equally likely, complexions. This number, i.e., the number of the complexions included in a given state can now be defined as the probability of the state.[11]

Let us present the matter in still another form. BOLTZMANN derives the expression for magnitude of the probability by at [Pg 27] once distinguishing between a state of a considered system and the complexion of the considered system. A state of the system is determined by the law of locus and velocity distribution, i.e., by a statement of the number of particles which lie in each elementary district of space and the number of particles which lie in each elementary velocity realm, assuming that among themselves these districts and realms are alike and each such infinitesimal element still harbors very many particles. Accordingly a particular state of the system embraces a very large number of complexions. For if any two particles belonging to different regions swap their co-ordinates and velocities, we get thereby a new complexion, but still the same state. Now BOLTZMANN assumes all complexions to be equally probable and therefore the number of complexions included in a particular state furnishes at the same time the numerical value for the Probability of the state in question.

Illustration taken from the simultaneous throwing of two, ordinary, cubical dice. Suppose that the sum is to be 4 for each throw, then this can be realized by the following three complexions:

First cube shows 1, the second cube shows 3;

First cube shows 2, the second cube shows 2;

First cube shows 3, the second cube shows 1.

The requirement that the sum on the two cubes shall be 2, however, involves but one complexion. Under the circumstances therefore the probability of throwing the sum 4 is three times as great as throwing the sum 2.
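The dice illustration can be verified by direct enumeration. In the sketch below the function name is an illustrative assumption; each ordered pair of faces is counted as one complexion, and the sum of the faces plays the part of the state.

```python
from collections import Counter
from itertools import product

def complexions_by_sum(dice=2, faces=6):
    """Count, for every possible sum, the number of ordered outcomes (complexions)."""
    throws = product(range(1, faces + 1), repeat=dice)
    return Counter(sum(throw) for throw in throws)

counts = complexions_by_sum()
print(counts[4], counts[2])              # 3 1
print(max(counts, key=counts.get))       # 7, the sum realized by the most complexions
```

Since all 36 complexions are equally likely, the ratio of the counts is the ratio of the probabilities, which gives the factor of three stated above.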

In closing this part of our presentation, we may make what is now an almost obvious remark. The long-lasting difficulty in giving a physical meaning to entropy and the Second Law is due to the fact of its intimate dependence on considerations of probability. It is only quite recently that such considerations have attained the dignity of a great working principle in the domain of Physics.

[9]LIOUVILLE'S theorem is the criterion for the equal possibility or equal probability of different state distributions.

[10]A happy term, but one not in vogue among English-speaking physicists.

[11]The identity of entropy with the logarithm of this state of probability is established by showing that both are equal to the same expression. It seems an easy step from this derivation to BOLTZMANN'S definition of entropy as the "measure of the disorder of the motions in a system of mass points."

[Pg 28]

(1) Existence, Definition, Measure, Relations, Properties, and Scope of Irreversibility and Reversibility.

In establishing the existence of irreversibility, we can use one or both of the two general methods of approaching any physical problem (see Introduction, pp. 2, 3): we can approach by way of the atomic theory or by considering the behavior of aggregates in Nature. Enough has already been said in this presentation of atomic behavior and arrangements to justify the statement that irreversibility is not inherent in the elementary procedures themselves but in their irregular arrangement. The motion of each atom is by itself reversible, but their combined mean effect is to produce something irreversible.[12]

This has been rigorously demonstrated by BOLTZMANN'S H-theorem for molecular physics, and when sufficiently general co-ordinates are substituted it is also available for the other domains of natural events. When we consider the behavior of aggregates we recognize at once a general, empirical law, which has also been called the one physical axiom, namely, that all natural processes are essentially irreversible. When we use this method of approach we confessedly rest entirely on experience, and then it does not make any logical difference whether we start with one particular fact or another, whether we start with a fact itself or its necessary consequence: For instance we may recognize that the universe is permanently different after a frictional event from what it was before, or we may start, as PLANCK does, by putting forward the following proposition:

"It is impossible to construct an engine which will work in [Pg 29] a complete cycle,[13] and produce no effect except the raising of a weight and the cooling of a heat reservoir."[14]

Now up to this time no natural event has contradicted this theorem or its corollaries. The proof for it is cumulative, wholly experiential and therefore exactly like that for the law of conservation of energy.

Returning to irreversibility, the matter for immediate discussion, we premise that it will here clarify and simplify our ideas if we consider all the participating bodies as parts of the system experiencing the contemplated process. It is in this sense that we must understand the statement: Every natural event leaves the universe different from what it was before. Speaking very generally, we may say that in this difference lies what we call irreversibility.

Now irreversibility is what really does exist, everywhere in Nature, and our idea of reversibility is only a very convenient and fruitful fiction; our conception of reversibility must, therefore, ultimately be derived from that of irreversibility.

"A process which can in no way be completely reversed is termed irreversible, all other processes reversible. That a process may be irreversible, it is not sufficient that it cannot be directly reversed. This is the case with many mechanical processes which are not irreversible (See p. 32). The full requirement is, that it be impossible, even with the assistance of all agents in Nature, to restore everywhere the exact initial state when the process has once taken place."

Examples of irreversible processes, which involve only heat and mechanical phenomena, may be grouped in four classes:

(a) The body whose changes of state are considered is in contact with bodies whose temperature differs by a finite amount [Pg 30] from its own. There is here flow of heat from the hotter to the colder body and the process is an irreversible one.

(b) The body experiences resistance from friction which develops heat; it is not possible to effect completely the opposite operation of restoring the whole system to its initial state.

(c) The body expands without at the same time developing an amount of external energy which is exactly equal to the work of its own elastic forces. For example, this occurs when the pressure which a body has to overcome is essentially (i.e., finitely) less than the body's own internal tension. In such a case it is not possible to bring the whole system (of which the body is a part) completely back into its initial state. Illustrations are: steam escaping from a high-pressure boiler, compressed air flowing into a vacuum tank, and a spring suddenly released from its state of high tension.

(d) Two gases at the same pressure and temperature are separated by a partition. When this is suddenly removed, the two gases mix or diffuse. This too is an essentially irreversible process.
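Classes (a) and (b) can be made quantitative with the entropy of CLAUSIUS, taken here as heat received divided by absolute temperature. The following is a minimal sketch and not part of the original text: the function names and the numerical values are illustrative assumptions, and every body is idealized as a reservoir so large that its temperature stays constant.

```python
def entropy_change_heat_flow(q_joule, t_hot, t_cold):
    """Total entropy change (J/K) when heat q_joule passes from a
    reservoir at t_hot to a reservoir at t_cold (kelvin)."""
    if t_hot <= 0 or t_cold <= 0:
        raise ValueError("temperatures must be absolute and positive")
    # The hot reservoir gives up q at t_hot; the cold one receives q at t_cold.
    return -q_joule / t_hot + q_joule / t_cold


def entropy_change_friction(work_joule, t_body):
    """Entropy change (J/K) when work_joule is turned wholly into heat
    by friction and taken up by a reservoir at t_body (kelvin)."""
    if t_body <= 0:
        raise ValueError("temperature must be absolute and positive")
    return work_joule / t_body


# Assumed illustrative values: 1000 J, reservoirs at 400 K and 300 K.
ds_a = entropy_change_heat_flow(1000.0, 400.0, 300.0)
ds_b = entropy_change_friction(1000.0, 300.0)
print(f"(a) heat flow across a finite temperature difference: {ds_a:.4f} J/K")
print(f"(b) work converted to heat by friction: {ds_b:.4f} J/K")
```

Under these assumptions both results are positive. As the two temperatures in case (a) approach each other the entropy change tends to zero, which is the reversible limit.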

Outside of chemical phenomena, we may instance still other examples of irreversible processes: flow of electricity in conductors of finite resistance, emission of heat and light radiation, and decomposition of the atoms of radio-active substances.

"Numerous reversible processes can at least be imagined, as, for instance, those consisting throughout of a succession of states of equilibrium, and therefore directly reversible in all their parts. Further, all perfectly periodic processes, e.g., an ideal pendulum or planetary motion, are reversible, for, at the end of every period the initial state is completely restored. Also, all mechanical processes with absolutely rigid bodies and incompressible liquids, as far as friction can be avoided, are reversible. By the introduction of suitable machines with absolutely unyielding connecting-rods, frictionless joints, and bearings, inextensible belts, etc., it is always possible to work the machine in such a [Pg 31] way as to bring the system completely into its initial state without leaving any change in or out of the machines, for the machines of themselves do not perform any work."

Other examples of such reversible processes are: free fall in a vacuum, propagation of light and sound waves without absorption and reflection, and unchecked electrical oscillations. All the latter processes are either naturally periodic, or they can be made completely reversible by suitable devices so that no sort of change in Nature remains behind; for example, the free fall of a body by utilizing the velocity acquired to bring the body back to its original height, light and sound waves by suitably reflecting them from perfect mirrors.

[12]This would seem to imply the existence of a broader principle, namely, that the properties of systems as a whole are not necessarily found in their parts.

[13]Such an engine, if it would work, might be called "perpetual motion of the second kind."

[14]The term perpetual is justified because such an engine would possess the most esteemed feature of perpetual motion—power production free of cost.

(2) Character of Process Decided by the Limiting States

"Since the decision as to whether a particular process is irreversible or reversible depends only on whether the process can in any manner whatsoever be completely reversed or not, the nature of the initial and final states, and not the intermediate steps of the process, entirely settle it. The question is, whether or not it is possible, starting from the final state, to reach the initial one in any way without any other change.... The final state of an irreversible process is evidently in some way discriminate from the initial state, while in reversible processes the two states are in certain respects equivalent.... To discriminate between the two states they must be fully characterized. Besides the chemical constitution of the systems in question, the physical conditions, viz., the state of aggregation, temperature, and pressure in both states, must be known, as is necessary for the application of the First Law."

"Let us consider any process whatsoever occurring in Nature.

This conducts all participating bodies from a particular initial condition A to a certain final condition B. The process is either reversible or irreversible, any third possibility being [Pg 32] excluded. But whether it is reversible or irreversible depends solely and only on the constitution of the two states A and B, not upon the other features of the course; after state B has been attained, we must here simply answer the question whether the complete return to A can or cannot be effected in any manner whatsoever. Now if such complete return from B to A is not possible then evidently state B in Nature is somehow distinguished from state A. Nature may be said to prefer state B to state A. Reversible processes are a limiting case; here Nature manifests no preference and the passage from the one to the other can take place at pleasure, in either direction. [In the common case of isentropic expansion from A to B, there is no exchange of heat with the outside; external work is performed at the expense of the inner energy of the expanding body. When state B is attained we can effect a complete return to A by compressing isentropically, thus consuming the external work performed on the trip from A to B and restoring the internal energy of the body.]
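The bracketed remark on isentropic expansion and recompression can be checked numerically. The sketch below is an illustration only and is not drawn from the original text: it assumes an ideal gas with a constant ratio of specific heats (1.4, roughly that of air), and all names and numbers are hypothetical.

```python
R = 8.314462618  # molar gas constant, J/(mol K)


def isentropic_temperature(t_start, v_start, v_end, gamma):
    """Temperature after an isentropic volume change of an ideal gas,
    from T * V**(gamma - 1) = constant."""
    return t_start * (v_start / v_end) ** (gamma - 1.0)


def work_by_gas(n_mol, t_start, t_end, gamma):
    """Work done by the gas in an adiabatic change. With no heat
    exchanged it equals the drop in internal energy, n * c_v * (T1 - T2)."""
    c_v = R / (gamma - 1.0)
    return n_mol * c_v * (t_start - t_end)


# Assumed values: 1 mol, gamma = 1.4, expansion from 1 litre to 3 litres.
n_mol, gamma = 1.0, 1.4
t_initial, v_initial, v_final = 500.0, 1.0e-3, 3.0e-3

# Outward trip: the gas expands, cools, and delivers external work.
t_final = isentropic_temperature(t_initial, v_initial, v_final, gamma)
w_out = work_by_gas(n_mol, t_initial, t_final, gamma)

# Return trip: isentropic compression back to the initial volume.
t_back = isentropic_temperature(t_final, v_final, v_initial, gamma)
w_in = -work_by_gas(n_mol, t_final, t_back, gamma)  # work done on the gas

print(f"temperature after expansion: {t_final:.2f} K")
print(f"work delivered on expansion: {w_out:.2f} J")
print(f"work consumed on return:     {w_in:.2f} J")
print(f"temperature after return:    {t_back:.2f} K")
```

Within rounding, the work consumed on the return equals the work delivered on the outward trip, and the initial temperature is recovered, so that no change remains in the gas or in its surroundings.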

"Now it becomes a question of finding a physical magnitude whose amount will serve as a general measure of Nature's preference for a state. This must be a magnitude which is directly determined by the state of the contemplated system, without knowing anything of the past history of the system, just as is the case when we deal with the state's energy, volume, etc. This magnitude would possess the property of growing in all irreversible processes, while in all reversible processes it would remain unchanged. The amount of its change in a process would furnish a general measure for the irreversibility of the process."

"Now R. CLAUSIUS really found such a magnitude and called it entropy. Every bodily system possesses in every state a particular entropy, and this entropy designates the preference of nature for the state in question; in all the processes which occur in the system, entropy can only grow, never diminish. If we wish to consider a process in which [Pg 33] said system is subject to influences from without, we must regard the bodies exerting such influences as incorporated with the original system and then the statement will hold in the above given form."

From what has gone before it is evident that the following commonly drawn conclusions are correct:

An irreversible process is a passage from a less probable to a more probable state of the system.

An irreversible process is a passage from a less stable to a more stable state of the system.

An irreversible process is essentially a spontaneous one, inasmuch as once started it will proceed without the help of any external agency.

We have in a general way reached the conclusion that entropy is both the criterion and the measure of irreversibility. But now let us become more specific and go more into certain details, namely, the common features in all irreversibility. The property of irreversibility is not inherent in the elementary occurrences themselves, but only in their irregular arrangement. Irreversibility depends only on the statistical property of a system possessing many degrees of freedom, and is therefore essentially based on mean values; in this connection we may repeat an earlier statement: the individual motions of atoms are in themselves reversible, but their result in the aggregate is not.
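The statistical character of irreversibility can be illustrated with a toy model. The sketch below uses the urn model of P. and T. EHRENFEST, which is brought in here as an assumption and does not appear in the original text: particles are distributed between two halves of a vessel, and at every step one particle chosen at random changes sides. The rule favors neither direction, yet the aggregate drifts from an ordered state toward the state with the most complexions. All names and parameter values are hypothetical.

```python
import math
import random


def log_complexions(n_total, n_left):
    """Natural logarithm of the number of ways of choosing which
    n_left of n_total labeled particles occupy the left half."""
    return (math.lgamma(n_total + 1)
            - math.lgamma(n_left + 1)
            - math.lgamma(n_total - n_left + 1))


def simulate_urn(n_total, steps, seed=0):
    """Return the number of particles in the left half after each step."""
    rng = random.Random(seed)
    n_left = n_total  # ordered start: every particle in the left half
    history = [n_left]
    for _ in range(steps):
        # Choose one particle uniformly at random and move it across.
        # The rule treats both halves alike; only the counts differ.
        if rng.randrange(n_total) < n_left:
            n_left -= 1
        else:
            n_left += 1
        history.append(n_left)
    return history


n_total = 1000
history = simulate_urn(n_total, steps=20000)
for step in (0, 100, 1000, 20000):
    n_left = history[step]
    print(step, n_left, round(log_complexions(n_total, n_left), 1))
```

In a run of this kind the logarithm of the number of complexions starts at zero and climbs toward its maximum near an even division, after which it only fluctuates slightly. A return to the ordered starting state is not forbidden by the rule, merely overwhelmingly improbable for so many particles.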

(3) All the Irreversible Processes Stand or Fall Together

This is proved with the help of the theorem (p. 30) which denies the possibility of perpetual motion of the second kind.[15] The argument is this: take any case in any one of the four classes of irreversible processes given on p. 31. Now if this [Pg 34] selected case is in reality reversible, i.e., if a method were discovered of completely reversing this process and thus leaving no other change whatsoever, then the direct course of the process combined with this reversed process would constitute a cyclical process, which would effect nothing but the production of work and the absorption of an equivalent amount of heat. But this would be perpetual motion of the second kind, which to be sure is denied by the empirical theorem on p. 30. But for the sake of the argument we may just now waive said impossibility; then we would have an engine which, co-operating with any second (so-called) irreversible process, would completely restore the initial state of the whole system without leaving any other change whatsoever. Then under our definition on p. 30 this second process ceases to be irreversible. The same result will obtain for any third, fourth, etc., so that the above proposition is established: "All the irreversible processes stand or fall together." If any one of them is reversible all are reversible.[16]

[15]At this stage we appreciate that any irreversible process is a passage from a state A of low entropy to a state B of high entropy. We may simplify our proof by considering the return passage from B to A to occur in part isothermally and in part isentropically; then external agencies must produce work and absorb an equivalent amount of heat.

[16]With the help of the preceding footnote this argument can be followed through in detail for each of the cases enumerated on p. 31; only the complicated case of diffusion presents any difficulty.

(4) Convenience of the Fiction, the Reversible Processes

A reversible process we have declared to be only an ideal case, a convenient and fruitful fiction which we can imagine by eliminating from an irreversible process one or more of its inevitable accompaniments like friction or heat conduction. But reversible (as well as irreversible) processes have common features. "They resemble each other more than they do any one irreversible process. This is evident from an examination of the differential equations which control them; the differential with respect to time is always of an even order, because the essential sign of time can be reversed. Then too they (in whatever domain of physics they may lie) have the common property that the Principle of Least Action [Pg 35] can represent all of them completely and uniquely determines the sequence of their events." They are useful for theoretical demonstration and for the study of conditions of equilibrium.

There is a certain, limited, incomplete sense in which we say that we can change from one state of equilibrium to another in a reversible manner. For example, we can, considering only the one converting (or intermediate) body, effect said change by a successive use of isentropic and isothermal change. But this ignores all but one of the participating bodies and this is not permissible if we strictly adhere to the true definition of complete reversible action.

We must remember too that no other universal measure of irreversibility exists than entropy. "Dissipation" of energy has been put forward as such a measure, but we know already of two irreversible cases where there is no change of energy, namely, diffusion and expansion of a gas into a vacuum. [Unavailable, distributed, scattered energy are terms which could be used here, free from all objection.]
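The two cases just named can be put in numbers. The following is a minimal sketch under the assumption of ideal gases, for which the internal energy depends on temperature alone; the formulas are the standard ideal-gas results and are not taken from the original text, and the function names and sample values are hypothetical.

```python
import math

R = 8.314462618  # molar gas constant, J/(mol K)


def free_expansion(n_mol, v_start, v_end):
    """Energy and entropy change of an ideal gas expanding into a vacuum.
    No work is done and no heat is exchanged, so the energy is unchanged."""
    delta_u = 0.0
    delta_s = n_mol * R * math.log(v_end / v_start)
    return delta_u, delta_s


def mixing(n_first, n_second):
    """Energy and entropy change when two different ideal gases at the
    same pressure and temperature diffuse into one another."""
    n_total = n_first + n_second
    x_first, x_second = n_first / n_total, n_second / n_total
    delta_u = 0.0
    delta_s = -R * (n_first * math.log(x_first)
                    + n_second * math.log(x_second))
    return delta_u, delta_s


# Assumed values: 1 mol doubling its volume; 1 mol of each gas mixing.
du_exp, ds_exp = free_expansion(1.0, 1.0, 2.0)
du_mix, ds_mix = mixing(1.0, 1.0)
print(f"expansion into vacuum: dU = {du_exp} J, dS = {ds_exp:.3f} J/K")
print(f"diffusion of two gases: dU = {du_mix} J, dS = {ds_mix:.3f} J/K")
```

In both cases the energy change is zero while the entropy change is positive, so a measure based on energy alone would fail to register these processes as irreversible.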